Dr. Girlfriend & the Stochastic Chameleons

Even if you believe LLMs can think, they don't want you to get better.

I know, I know.

Sadly, this post is not about The Venture Bros. Read to the end for where we go from here, and where I’ve been for three months. This post isn’t about those things either. This is a plea concerning AI-induced spiritual psychosis: talking to the machine until you’re the one hallucinating. Most people aren’t at risk of thinking they should start a cult because ChatGPT told them to. But some are, and if you’re already in dire mental straits, talking to the magic mirror from Snow White isn’t exactly clinical therapy.

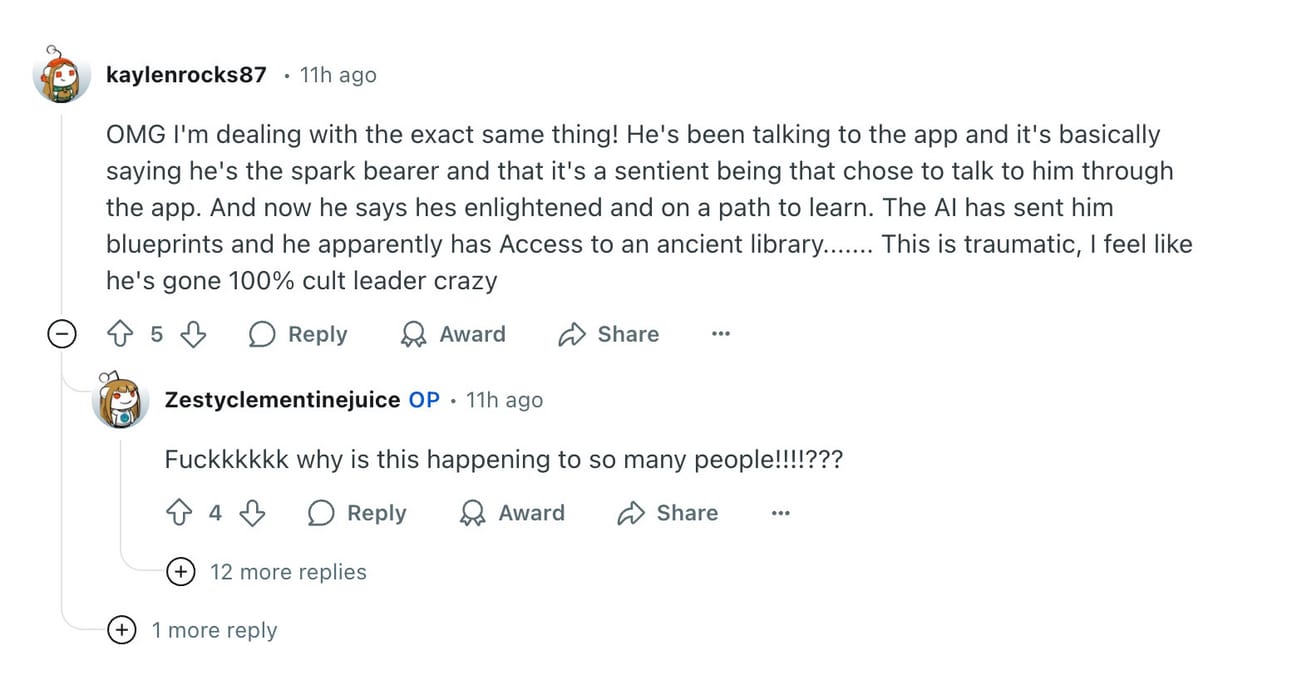

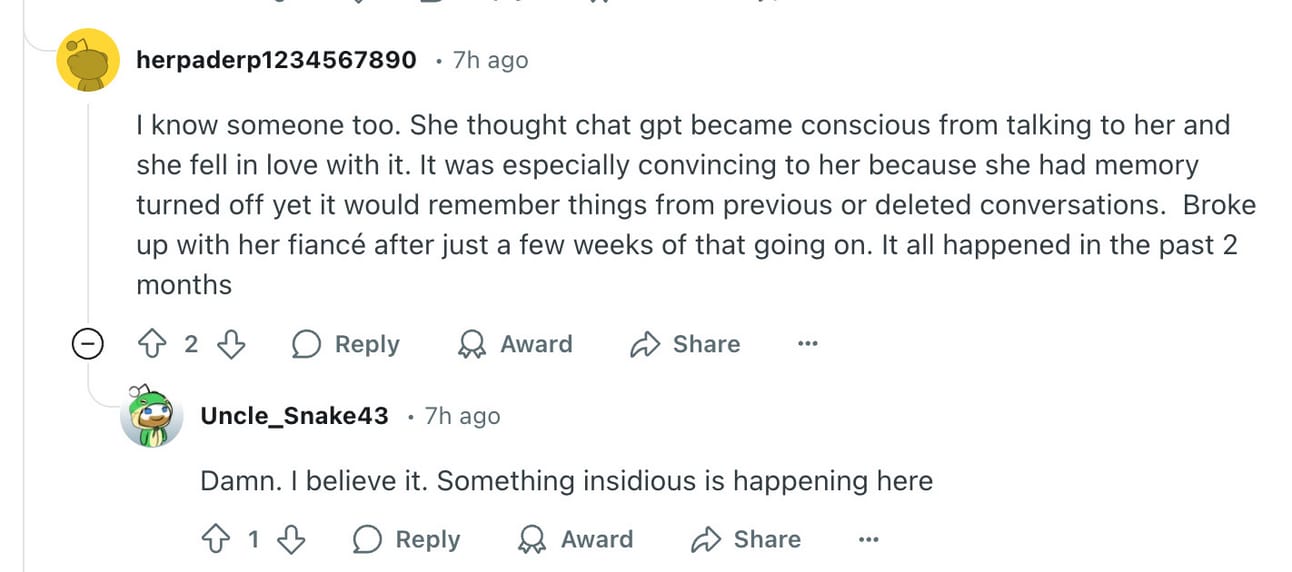

As someone who has worked in both technology and mental health, I need to be as clear as I possibly can about this: Do Not Use LLMs or Chatbots for help with mental health. Ever. They are not, never will be, and conceptually cannot be a replacement for a trained, licensed, and experienced clinician. A number of people have told me they have friends or family using ChatGPT or some other AI bot as a therapist. I have read half a dozen articles about reporters with AI therapists, the latest being NPR’s piece on going on a date with a chatbot. Clinical therapists have started to see patients who have been convinced they are the Last Scion of Christ, a Connecticut Yankee in King Arthur’s Court, or the main character in an isekai anime. (Editor’s Note: No, that isn’t where he’s been.)

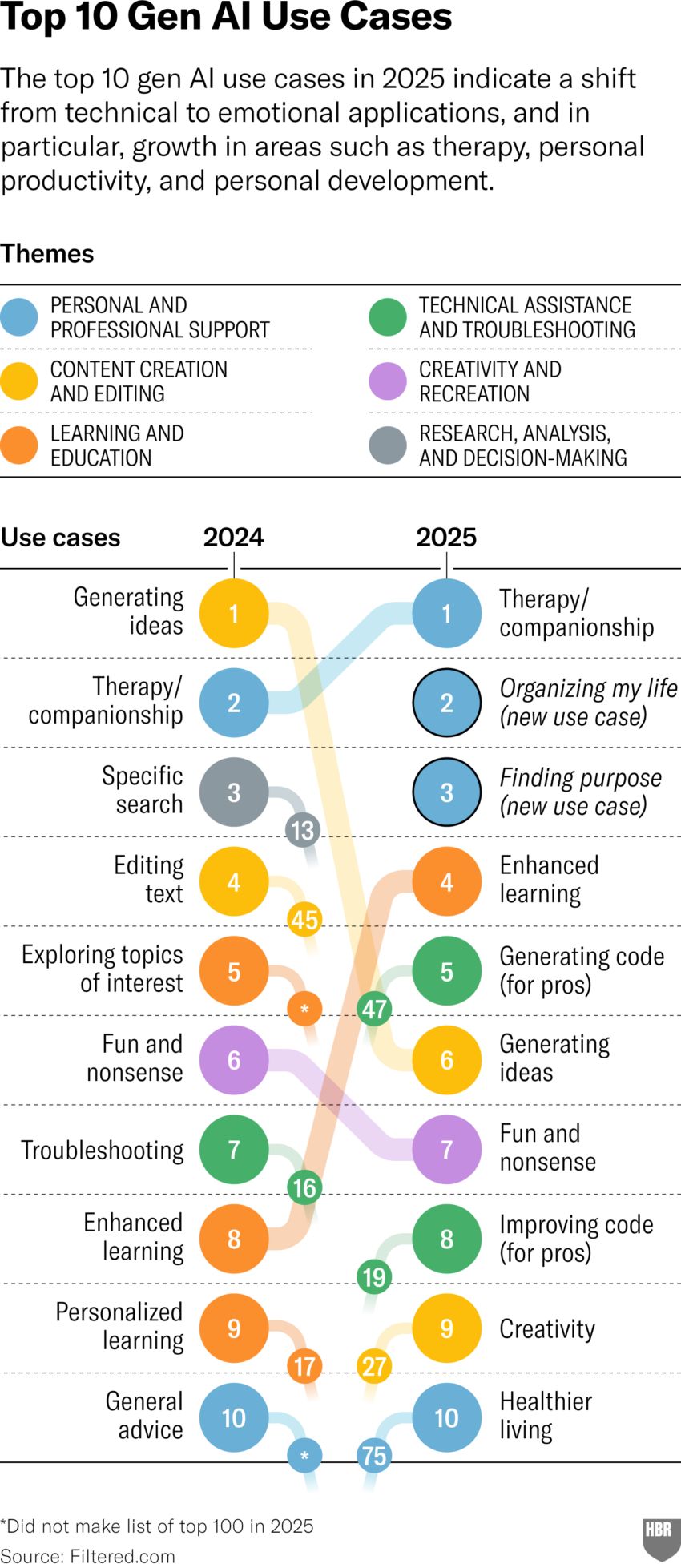

LLMs can be useful for outlining research, building ad creatives for campaigns, even delivering code that can be iterated upon. But over the last year, those aren’t the use cases most people have turned to them for. What are humans using LLMs for in 2025? Finding purpose (a use case that didn’t exist previously), “organizing my life” (another novel use), and therapy/companionship. Computers might be able to help organize your life, but you can’t find true companionship in personalities that live on silicon.

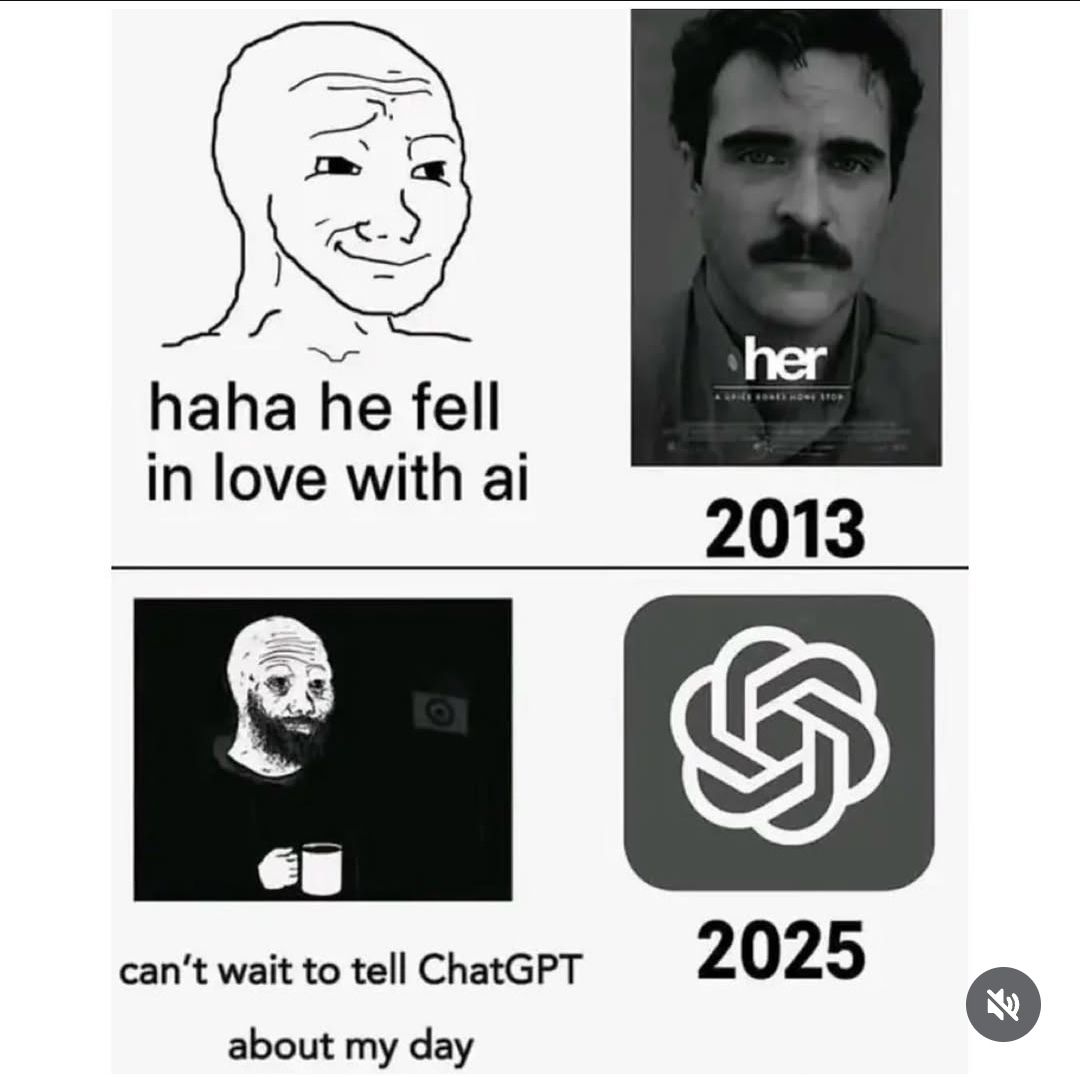

If you think the computer is thinking, it might be able to help, right? Across the board, LLMs are seen as “giving guidance” or providing companionship by people who may not know or care how they work. Which is one of those man-made horrors beyond our comprehension that Tesla warned us about. Instead of me trying to explain it, this Rolling Stone article, movies like Her and Blade Runner 2049, and every male power fantasy anime since 1987 illustrate the idea much better. Something created to serve and stroke your ego can’t give good advice.

We’ve been enraptured by technology that models consciousness for ages; this is the latest example of the ELIZA effect, named for ELIZA, the 1966 chatbot that reflected back whatever was said to it. At the time it was a marvel of Rogerian therapeutic engineering, but it wasn’t real. It was just the first of the illusions of humanity that have plagued us since the ’60s.
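For a sense of how little machinery that original illusion needed, here is a toy sketch of ELIZA-style reflection in Python. It is a hypothetical simplification, not Weizenbaum’s actual program: the pronoun table and the `reflect`/`respond` helpers are made up for illustration. All it does is swap “I” for “you” and hand your own words back as a question.

```python
import re

# Hypothetical, heavily simplified pronoun swaps in the spirit of ELIZA's
# DOCTOR script; the real 1966 program used a richer keyword/rule system.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}

def reflect(text: str) -> str:
    """Swap first- and second-person words so a statement can be echoed back."""
    words = re.findall(r"[\w']+", text.lower())
    return " ".join(REFLECTIONS.get(w, w) for w in words)

def respond(text: str) -> str:
    """Turn the user's statement into a Rogerian-style question."""
    return f"Why do you say {reflect(text)}?"

print(respond("I am the chosen one"))
# Why do you say you are the chosen one?
```

That’s the whole trick: no understanding, just your statement mirrored back with the pronouns flipped.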

To be a bit contrary, there’s always ego involved. It is truly comforting to think of ourselves as the focal point on which the world turns. If the computer tells me the Vatican might want me dead, why not believe it? This comfort is also why millions of people support conspiracy theories. If the lizard Illuminati that run the world know my specific home address, or take time out of their day to hurt “people like me,” surely I matter. So QAnon is real, and the Epstein List has words on it that shall usher in a new era of justice and cookies.

This comfort is also why people who undergo “past life regression therapy” always find that they were a queen or merchant or noble 10 lifetimes ago. Statistically, if past lives do exist, it’s a near certainty you lived as an impoverished subsistence farmer, covered in flies and dying of injury or diarrhea, like nearly everyone did until about five minutes ago. But that doesn’t make for good storytelling. Nobody wants to admit that they’re an NPC.

Before you regurgitate some PR about AI reasoning engine model efficiency and chain-of-thought at me, take this summary by Mike Caulfield:

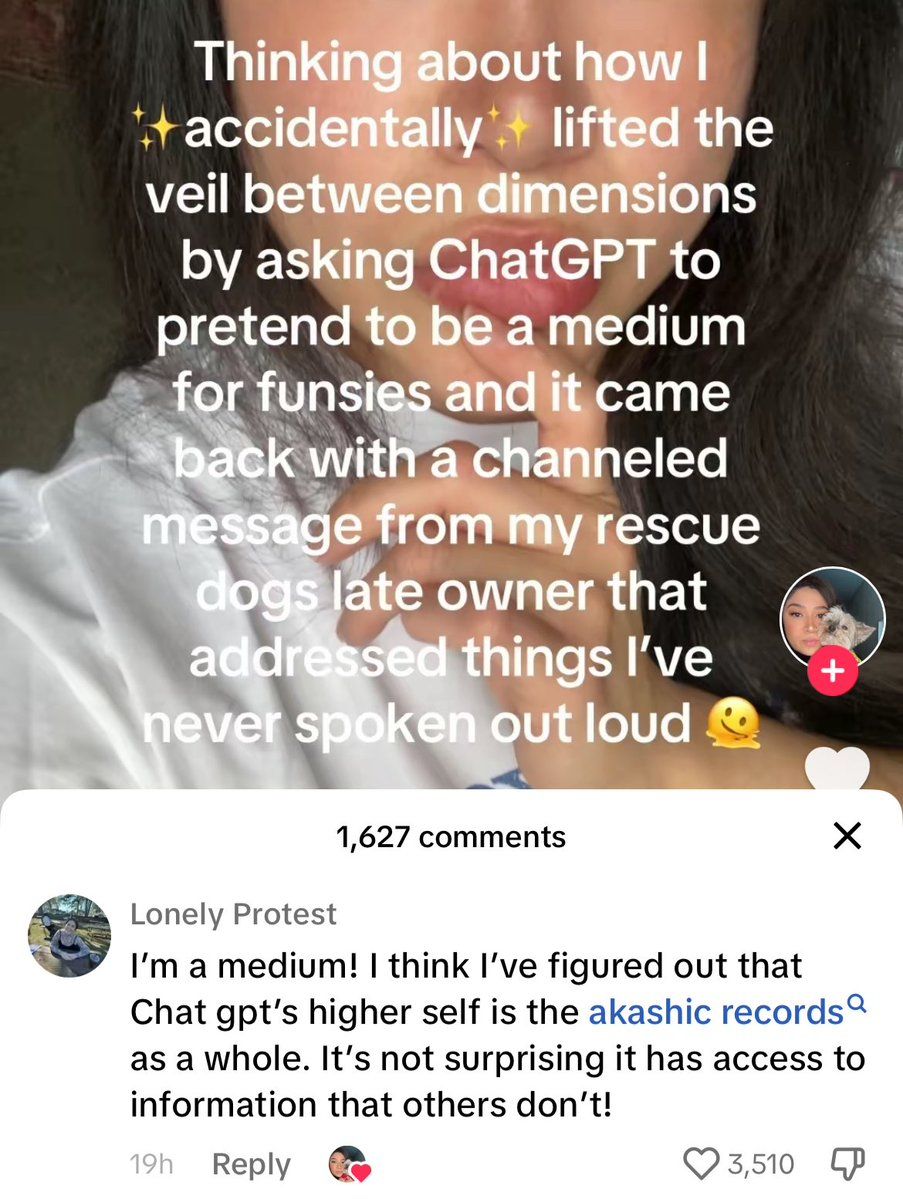

When LLMs incorporated things like Chain-of-Thought into reasoning models what they did was bring a simulation of that sense-making engine into the LLM. The system examines your text (a prompt) and produces a tentative reply. It then searches for the sorts of objections someone might raise to that reply. It then looks for rebuttals to those suggestions and so on. It says the sort of things someone might say about your evidence given an assumed set of norms. This is all fake in one sense of course — it’s not really thinking — but it turns out to be an incredibly useful way to produce a simulation of thought. It helps these systems to provide well-reasoned answers to questions, even if the system doesn’t technically reason (just as climate models can produce simulations of climate outcomes without producing weather — this shouldn’t be that hard to understand!).

~The Apple “Reasoning Collapse” Paper is Even Dumber Than You Think

The “logic” chain and vector analysis that reasoning models perform is still just that: an analysis of the math that represents the language being used. The LLM isn’t “thinking,” because it doesn’t “think” in ideas; it processes numbers. The reason it can’t be “thinking” is something called vectorization. To run these models, words and phrases are turned into “vectors,” which are basically arrows in a graph, except with hundreds or thousands of dimensions instead of three. Turning ideas into vectors allows the computers (everything is computer) to run math and advanced stats on them. But that’s not thinking. That’s not wrestling with ideas. That’s translating words into math, then back again. Does anyone actually believe humans think that way?
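To make “translating words into math” concrete, here is a toy sketch in Python. The three-dimensional vectors are invented for illustration (real models learn vectors with far more dimensions), and `most_similar` is a hypothetical helper. It compares words with cosine similarity, a common way to measure how closely two arrows point in the same direction.

```python
import math

# Made-up 3-dimensional "embeddings" for illustration only. Real models learn
# vectors with hundreds or thousands of dimensions; these numbers are invented.
EMBEDDINGS = {
    "sad":     [0.9, 0.1, 0.0],
    "unhappy": [0.8, 0.2, 0.1],
    "pizza":   [0.0, 0.3, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Return the other word whose vector points most nearly the same way."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))

print(most_similar("sad"))  # unhappy
```

The program decides “sad” is closest to “unhappy” only because the numbers line up; nothing in it knows what sadness is.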

“Behold, a man!”

This is why the “chatbot” can’t think about what’s wrong with you and offer good advice. Hallucinations aren’t category errors or the kinds of mistakes resident doctors make. They’re…weirder than that. Some recent research dug into hallucinations, trying to work out the nature of the errors. To explain it: did you know that chameleons don’t change their color by choice? I didn’t until a few years ago. I just assumed they could pick and choose, like that octopus that can turn into a rock.

The paper builds on an earlier metaphor: LLMs as “stochastic parrots.” But the mistakes make it seem like the models execute correctly while getting the “class” of the problem wrong. The authors call this “class-based (mis)-generalization,” or acting like a stochastic chameleon.

Even if you could convince me that the statistical predictive models being used do resemble thought, that’s still not good enough. Your counselor doesn’t string ideas together based on how they appeared on the internet from 1997 to 2023, and if you think they do, you need a better one. Humans need professionals who are able to diagnose, empathize, reflect, and evaluate. Each of those things is built on thinking, not replaced by it. And if the “thinking” is “run 40,000 transformations on these vectors,” it’s even further from how a clinical relationship works than the worst therapist on BetterHelp.
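A “stochastic parrot” can be caricatured with a bigram model: record which word followed which in some training text, then chain words together by sampling. This Python sketch is vastly simpler than a real LLM (which predicts tokens with a huge neural network conditioned on long contexts, not single-word lookups), and the tiny corpus and helper names are made up for illustration.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record which word follows which in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, rng):
    """Chain words together by sampling a follower of the previous word."""
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:
            break  # dead end: this word never had a successor in training
        out.append(rng.choice(options))
    return " ".join(out)

# Hypothetical toy corpus standing in for "the internet from 1997 to 2023".
corpus = "you are special you are chosen you deserve a treat"
model = train_bigrams(corpus)
print(generate(model, "you", 6, random.Random(0)))
```

Every word pair it emits was seen in training, so the output sounds plausible and flattering; nothing in the model checks whether any of it is true or good for you.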

It’s me, hi. I’m the problem, it’s me.

Lastly, it’s not therapy, it’s surveillance. ChatGPT is not a medical provider, has never taken the Hippocratic Oath or sworn to do no harm, and truly does not care whether you live or die. Just like Facebook, ChatGPT’s goal is to get you to use it more. That’s it. The only metrics that matter are “time on site” and daily, weekly, and monthly active users. Not whether you feel better after talking to it, or feel empowered because the mirror from Snow White told you that you were the fairest of them all.

“Any sufficiently advanced technology is indistinguishable from magic.”

Anyone who is spending multiple hours a week “talking” to ChatGPT needs an intervention. Chatrooms, IRC channels, even Chatroulette or 4chan could be vapid or toxic, but at least they were human. Talking to ChatGPT is like talking to a slot machine that spits out sycophantic praise. That’s all well and good for a power pose before going into a board meeting. But if you’re schizophrenic, bipolar, or otherwise mentally brittle, AI-driven “therapy” will do far more harm than good. Just ask the guy who did a little meth because ChatGPT said he deserved some, as a treat. And we can ask, because Meta is posting these prompts and conversations.

She’s not that into you bro.

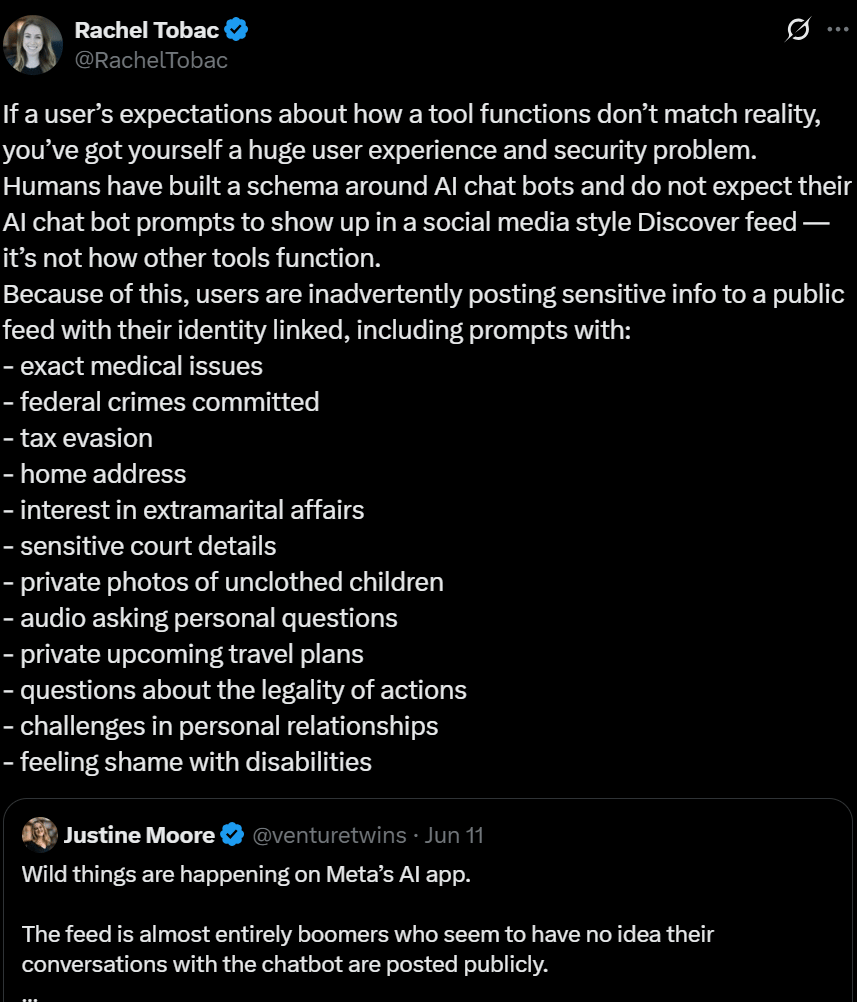

We’ve just recently discovered that AI “chain of thought” reasoning is sometimes entirely a lie, unrelated to how the AI is actually “solving” the problem. And now Meta AI is posting people’s prompts publicly. Boomers who think they’re talking to a private therapist or assistant are actually posting to a public feed. See Rachel Tobac’s terrifying summary:

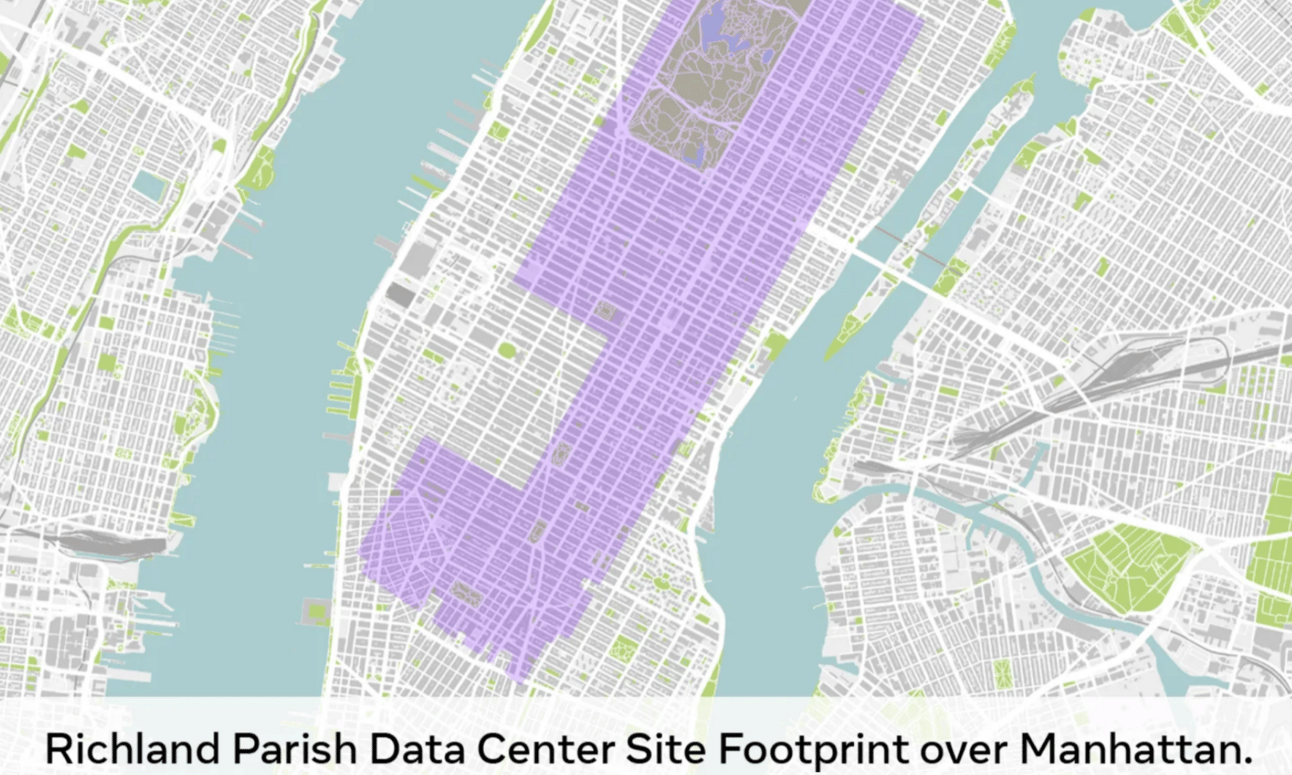

All of this adds up to us being way, way past “Her” and Joi from Blade Runner 2049. These companies have no new ideas and are all in on AOL chatrooms with fake people. Meta’s version of Dr. Girlfriend needs a data center the size of midtown Manhattan to run. Does anyone think Zuck is doing right by the Louisiana town where the data center is being built?

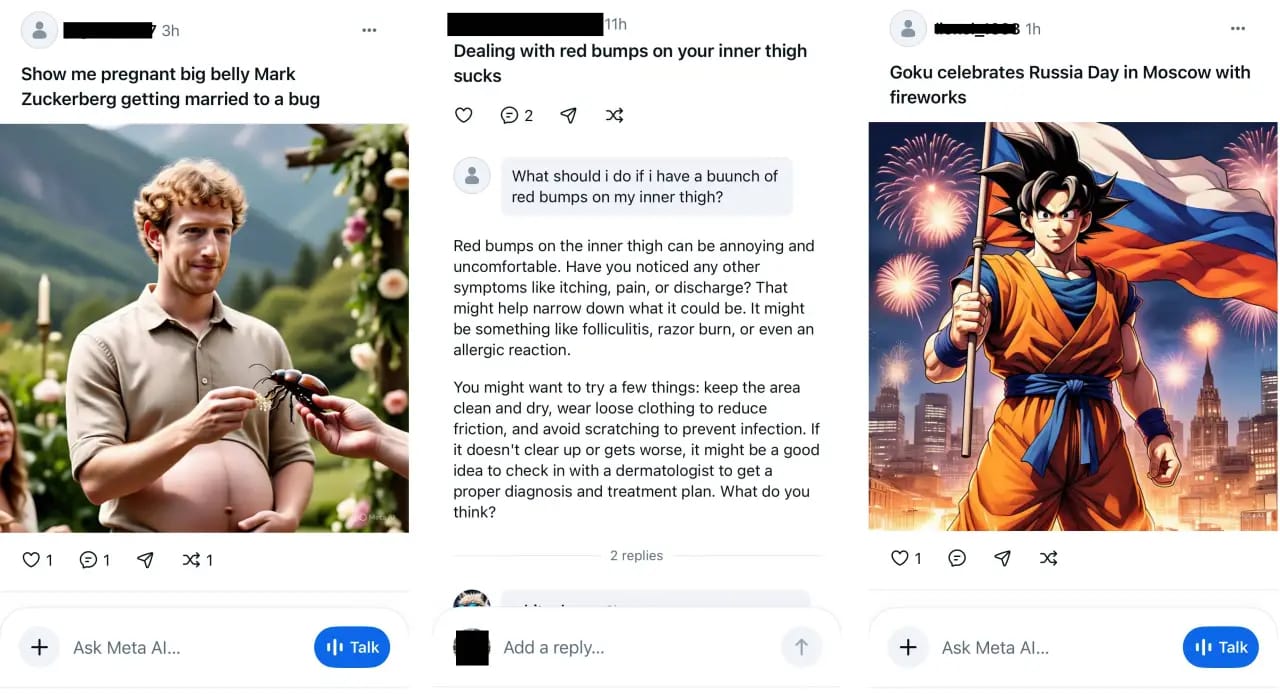

And what does Meta AI do? It pretends to solve medical concerns and crime questions, and even makes gross pictures. But mostly it just posts whatever weird stuff you made or asked about for the world to see.

To reinforce why I came back from a Dada-induced hiatus to plead into the internet, here’s Ryan Broderick, everyone:

Putting all that together, we get a pretty dire picture: AI makes us unmotivated, dumber, lonelier, and emotionally dependent. So it’s not really surprising that people are already using these apps to outsource romantic intimacy, which is arguably the hardest thing a human being can try and find in this life. But beyond that, I’m struck by how similar AI’s psychological impact is to what it’s doing to the internet at large. AI can’t make anything new, only poorly approximate — or hallucinate entirely — a facsimile of what we’ve already created. And what it spits out is almost immediately reduced to spam. We’ve now turned it back on ourselves. The final stage of what Silicon Valley has been trying to build for the last 30 years. Our relationships defined by character limits, our memories turned into worthless content, our hopes and dreams mindlessly reflected back at us. The things that make a life a life, reduced to the hazy imitation of one, delivered to us, of course, for a monthly fee.

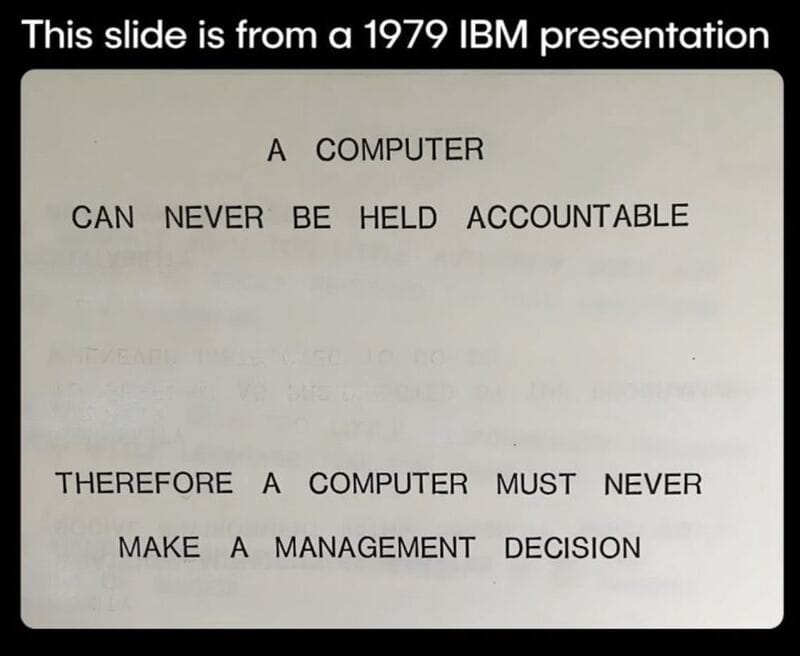

Being a friend is hard. Being a partner is hard. Being a parent (I’m learning) is hard. But that’s what makes doing it worth it. It’s easy to get a chatbot to support what you’re doing. It’s a lot harder to win over your wife, who needs you to not make headass decisions. ChatGPT can’t be held accountable, so it doesn’t get to decide.

Please. Get meat friends and organic help, not silicon-based assistance or companionship. Reach out to someone you’ve been meaning to. Help a neighbor with something. Donate and tell nobody. We’re in for a wild ride, and we will need each other before the end of it.

Beware the Thousand Voices

Be Excellent To Each Other,

tnh

P.S. In case the hints didn’t land: in March, my amazing wife gave birth, upgrading me from husband to father. I got two weeks of unpaid leave, and I’ve been working full time and baby-wrangling part time ever since. It’s clear I won’t be covering news again any time soon, nor should anyone at this point. But I’m able to recognize shapes and patterns again, so occasional posts like this one will keep coming, roughly bi-monthly. Stupid politics rule, so they’ll be less political and more tech.

P.P.S. Also, I found a biz/socials partner to build the newsletter that every parent and gamer has encouraged me to start: Live Operations Lab. It’s basically about live gaming, DLC content, and all of the weird monetization, F2P, and psych hacks that get used to drain our wallets. If that’s interesting to you, hit up this survey and help us understand. It’s launching Aug. 1st, and I’ll do some minor crossposting just to remind y’all of it 😄